Excel: Find Normal Characters For Unicode In Your Data!

Do you ever encounter text that looks like gibberish, a jumble of symbols and characters that bear little resemblance to the words you intended to read? This frustrating phenomenon, known as mojibake, is a surprisingly common consequence of mismatched character encodings and can render perfectly good data completely unreadable.

The fundamental issue lies in how computers store and interpret text. Every character, from the humble letter "a" to the more exotic characters with diacritics, is represented by a sequence of bytes. The interpretation of these bytes, however, depends entirely on the character encoding used. When the encoding used to read the bytes doesn't match the encoding used to write them, the result is mojibake.

For instance, a sequence of bytes might represent a hyphen in one encoding, but when interpreted with a different encoding, it could become something entirely different, perhaps a question mark, a diamond, or a series of seemingly random characters. This is why the same byte sequence can appear differently depending on the context.
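As a minimal sketch of the point above, the snippet below decodes the same three bytes twice, once with the encoding they were written in and once with a mismatched one. The choice of an en dash and of Windows-1252 (`cp1252`) as the mismatched encoding is an assumption made for illustration.

```python
# The UTF-8 encoding of an en dash (U+2013) is three bytes.
raw = "\u2013".encode("utf-8")

as_utf8 = raw.decode("utf-8")      # the encoding the bytes were written in
as_cp1252 = raw.decode("cp1252")   # a mismatched single-byte encoding

print(raw.hex(" "))                # e2 80 93
print(repr(as_utf8))               # one character: the en dash
print(repr(as_cp1252))             # three characters: U+00E2, U+20AC, U+201C
```

The bytes never change; only the interpretation does, which is why the damage is usually reversible.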

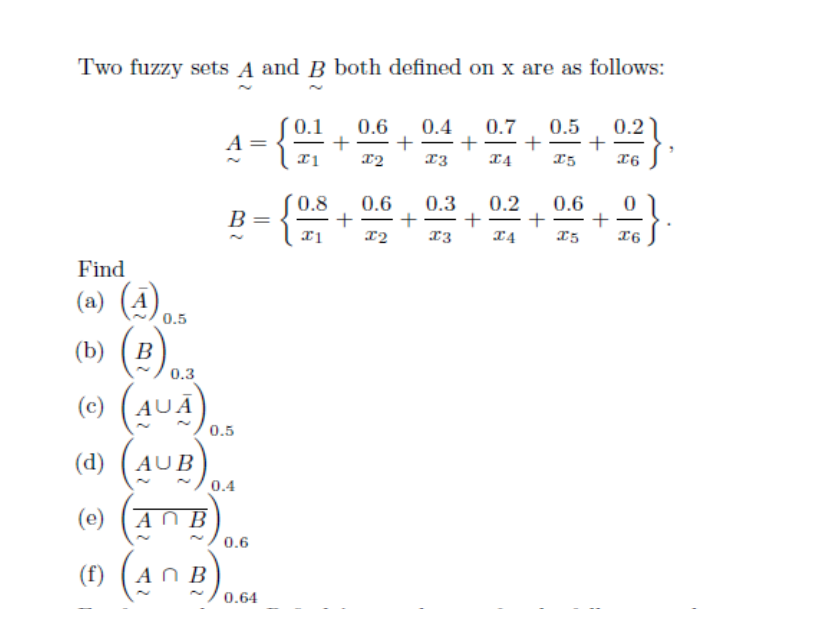

If you find yourself staring at â€“ (\u00e2\u20ac\u201c) instead of the intended en dash, or â€œ (\u00e2\u20ac\u0153) where an opening quotation mark should be, you're experiencing mojibake firsthand. In simple cases like the dash, you can fix the data with a tool such as Excel's Find and Replace, but you won't always know what the correct normal character should be. This is especially true when dealing with more complex characters or when the mojibake corrupts the original text beyond recognition.
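When you do not know the intended character, one option is to reverse the bad decoding rather than guess. The sketch below assumes the common case where UTF-8 bytes were read as Windows-1252; `repair_mojibake` is a hypothetical helper name, not an Excel or library function.

```python
def repair_mojibake(text, wrong="cp1252", right="utf-8"):
    """Undo one round of mis-decoding; return the input unchanged if it does not apply."""
    try:
        # Re-create the original bytes, then decode them with the intended encoding.
        return text.encode(wrong).decode(right)
    except (UnicodeEncodeError, UnicodeDecodeError):
        return text

print(repr(repair_mojibake("\u00e2\u20ac\u201c")))  # en dash, U+2013
print(repr(repair_mojibake("\u00e2\u20ac\u0153")))  # left double quotation mark, U+201C
print(repr(repair_mojibake("plain text")))          # unchanged
```

This only handles a single layer of damage and one assumed encoding pair. Text that was mangled twice, or that passed through bytes Windows-1252 leaves undefined, needs more care; the third-party `ftfy` package is built for those harder cases.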

So, is there a readily available function or a tool within Excel or other applications that can decipher what a specific mojibake sequence corresponds to in the original text? The answer is not always a simple yes, but there are strategies and resources that can help.

Mojibake can manifest in various forms, making the text difficult to decipher. A common symptom is missing or replaced characters, which is especially problematic in short words and in words that begin with an accented letter. When those letters are dropped or replaced with unrelated symbols, the word can become incomprehensible. The problem compounds when the text contains characters with tildes, diaereses, or ring-above marks.

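Several common encoding mismatches also produce recognizable patterns. For example, UTF-8 text read as Windows-1252 turns many accented Latin letters into two-character sequences that begin with Ã. Understanding these patterns is key to identifying the correct characters.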

We live in a digital age in which people buy and rent movies online, download software, and share and store files on the web. One aspect of this shift is the way we handle text, and that brings its own challenges.

Consider two everyday examples. In phpMyAdmin, you can run an SQL command to display the character sets a database uses. On the input side, keyboard shortcuts exist for characters that don't have a dedicated key: with the "e with accent" Alt codes, you can type "e" with an accent mark over it (è, é, ê, ë or È, É, Ê, Ë) on a Windows keyboard.

This method offers a way to type symbols that do not have a dedicated key.

Understanding the importance of accurately representing characters extends to many languages. For example, in Italian, to write the accented capital vowels, you must use a key combination, holding the Alt key while typing the numbers from the right-hand column of the table.

For instance, Latin small letter e with acute (é) is code point U+00E9. In UTF-8 it is stored as two bytes, 0xC3 0xA9. If software instead interprets those two bytes as the distinct code points U+00C3 and U+00A9, as ISO-8859-1 or Windows-1252 would, you get Latin capital letter A with tilde (Ã) and a copyright sign (©), so é shows up as Ã©.
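The example above can be checked with a few lines of Python. This is a small sketch using only the standard library; `latin-1` stands in for whichever single-byte encoding did the misreading.

```python
import unicodedata

original = "\u00e9"                      # Latin small letter e with acute
utf8_bytes = original.encode("utf-8")    # two bytes: 0xC3 0xA9
misread = utf8_bytes.decode("latin-1")   # each byte becomes its own code point

# Print the code point and official Unicode name of every character involved.
for ch in original + misread:
    print(f"U+{ord(ch):04X}  {unicodedata.name(ch)}")
```

The first line of output describes the intended character, and the next two describe the pair that replaces it on screen.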

Let's look at some examples using the Option key. Opt + e, then a = á. Opt + e, then e = é. Opt + e, then i = í. Opt + e, then o = ó. Opt + e, then u = ú. For the ñ, hold down the Option key while you type n, then type n again: Opt + n, then n = ñ. To type an umlaut over the u (ü), hold down the Option key while pressing the u key, then type u again.

These marks matter because they change the pronunciation of a, e, and o, and they distinguish between words that would otherwise be homographs.

There are a few strategies one can use to identify and correct mojibake. The key is to identify the encoding used by the original text. A good starting point is to know the intended language of the text, as that can provide clues about the characters used. For example, if you know the text is in French, you can start by looking for encodings commonly used for French, such as ISO-8859-1 or UTF-8.

Next, one method is to try different character encodings in a text editor. Most modern text editors, like Notepad++, Sublime Text, or Visual Studio Code, allow you to open a file and change the encoding. By trying different encodings, you might stumble upon the correct one, which will display the text correctly. This is often a trial-and-error process, but it can be surprisingly effective.
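The same trial-and-error can be scripted. The sketch below decodes one byte string with several candidate encodings so the results can be compared side by side; `try_encodings` and the candidate list are assumptions chosen for illustration.

```python
def try_encodings(raw, candidates=("utf-8", "cp1252", "latin-1", "cp437")):
    """Decode raw bytes with each candidate; None marks a decoding failure."""
    results = {}
    for name in candidates:
        try:
            results[name] = raw.decode(name)
        except UnicodeDecodeError:
            results[name] = None
    return results

sample = "Se\u00f1or".encode("cp1252")   # bytes whose encoding we pretend not to know
for name, text in try_encodings(sample).items():
    print(f"{name:8} {text!r}")
```

A successful decode is not proof of correctness: `latin-1` accepts every possible byte, so it never fails. The decoded text still has to be read by someone who knows what it should say.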

Another useful approach is to use online tools designed to identify and convert character encodings. Websites like "CyberChef" offer a "Magic" function that can often detect the encoding of a text and convert it to a more readable form. These tools can be particularly helpful when you're unsure about the original encoding.

If you know some of the original text or a few of its characters, you can use those clues to identify the correct encoding. For instance, if you know that certain accented letters should appear in the text, the original was likely encoded with a character set that supports those characters, such as ISO-8859-1 or UTF-8.
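Known text can also drive a small search. The sketch below tries every ordered pair of candidate encodings and keeps the pairs that turn the garbled string into something containing the fragment you expect. `find_encoding_pair`, the candidate list, and the sample name are all hypothetical.

```python
from itertools import permutations

def find_encoding_pair(garbled, expected,
                       candidates=("utf-8", "cp1252", "latin-1", "cp437")):
    """Return (written_as, read_as) pairs that explain the garbled text."""
    matches = []
    for read_as, written_as in permutations(candidates, 2):
        try:
            # Recover the bytes as the wrong decoder saw them, then decode properly.
            repaired = garbled.encode(read_as).decode(written_as)
        except (UnicodeEncodeError, UnicodeDecodeError):
            continue
        if expected in repaired:
            matches.append((written_as, read_as))
    return matches

garbled = "Fran\u00c3\u00a7ois"          # the name as it appears on screen
print(find_encoding_pair(garbled, "Fran\u00e7ois"))
```

More than one pair can match, because Windows-1252 and ISO-8859-1 agree on most printable characters. Any matching pair is good enough to repair that particular text.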

Unicode is a universal character encoding standard that assigns a unique number, called a code point, to every character, regardless of platform, program, or language. Its primary goal is to let computer programs, devices, and services handle text in any language consistently.

UTF-8, UTF-16, and UTF-32 are different ways to encode Unicode characters, and all three can represent every Unicode code point. UTF-8 is the most widely used encoding on the web. It uses one to four bytes per character and is backward compatible with ASCII: the first 128 characters (the ASCII character set) are each represented by a single byte that is identical to its ASCII counterpart. UTF-16 uses two or four bytes per character, and UTF-32 uses four bytes for every character. The advantage of UTF-8 is its compactness for text that is mostly ASCII. The advantage of UTF-32 is its fixed width, which makes indexing by code point simple, while UTF-16 can be more compact than UTF-8 for text dominated by East Asian scripts. Both UTF-16 and UTF-32 are less space-efficient than UTF-8 for text that primarily uses ASCII characters.
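To make the size differences concrete, the sketch below counts the bytes each encoding needs for four sample characters. The little-endian codec names are used so that Python does not prepend a byte order mark to the count; the sample characters are arbitrary.

```python
samples = ["a", "\u00e9", "\u20ac", "\U0001F600"]  # a, e with acute, euro sign, an emoji

def encoded_sizes(ch):
    """Byte counts for one character in UTF-8, UTF-16, and UTF-32."""
    return tuple(len(ch.encode(enc)) for enc in ("utf-8", "utf-16-le", "utf-32-le"))

for ch in samples:
    print(f"U+{ord(ch):04X}", encoded_sizes(ch))
# U+0061 (1, 2, 4)
# U+00E9 (2, 2, 4)
# U+20AC (3, 2, 4)
# U+1F600 (4, 4, 4)
```

Every character is representable in all three encodings; only the number of bytes differs.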

It's essential to ensure that the software you use, such as text editors, databases, and programming languages, is configured to use the correct encoding when you work with text. Otherwise, the problem of mojibake will persist. If your database is configured to use UTF-8, make sure that the data you import is also encoded in UTF-8.

In spreadsheets, when you encounter mojibake, the solution is to identify the correct encoding, import the data into a text editor that supports the correct encoding, and then copy and paste the corrected data back into the spreadsheet. Many spreadsheet programs offer import options that allow you to specify the encoding of the file, thereby ensuring that the data is displayed correctly from the start.
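If you prefer to convert the file before opening it, a short script can do the re-encoding. The sketch below assumes the source file is Windows-1252 and writes UTF-8 with a byte order mark (`utf-8-sig`), which Excel generally uses as its cue to read a CSV as UTF-8. The helper name and file names are placeholders.

```python
import os
import tempfile

def reencode_for_excel(src, dst, source_encoding="cp1252"):
    """Rewrite a text file as UTF-8 with a BOM, preserving line endings."""
    with open(src, "r", encoding=source_encoding, newline="") as fin:
        text = fin.read()
    with open(dst, "w", encoding="utf-8-sig", newline="") as fout:
        fout.write(text)

# Demo on a throwaway file; the file names are placeholders.
with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "export_cp1252.csv")
    dst = os.path.join(tmp, "export_utf8.csv")
    with open(src, "wb") as f:
        f.write("name,city\r\nJos\u00e9,M\u00e1laga\r\n".encode("cp1252"))
    reencode_for_excel(src, dst)
    with open(dst, "rb") as f:
        print(f.read()[:3])  # b'\xef\xbb\xbf', the UTF-8 byte order mark
```

The `source_encoding` argument has to match how the file was actually written; passing the wrong value simply bakes the mojibake into the new file.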

As you delve into the world of character encodings, it's important to understand a few basic concepts. A "character set" is a defined set of characters, such as the letters of the alphabet, numbers, and punctuation marks. "Encoding" is how the characters are represented by a sequence of bytes. Different encodings, such as UTF-8, ISO-8859-1, and others, are used to represent the characters in a specific character set. The most important consideration when handling text is to be consistent in the encodings used for storing, processing, and displaying the text.

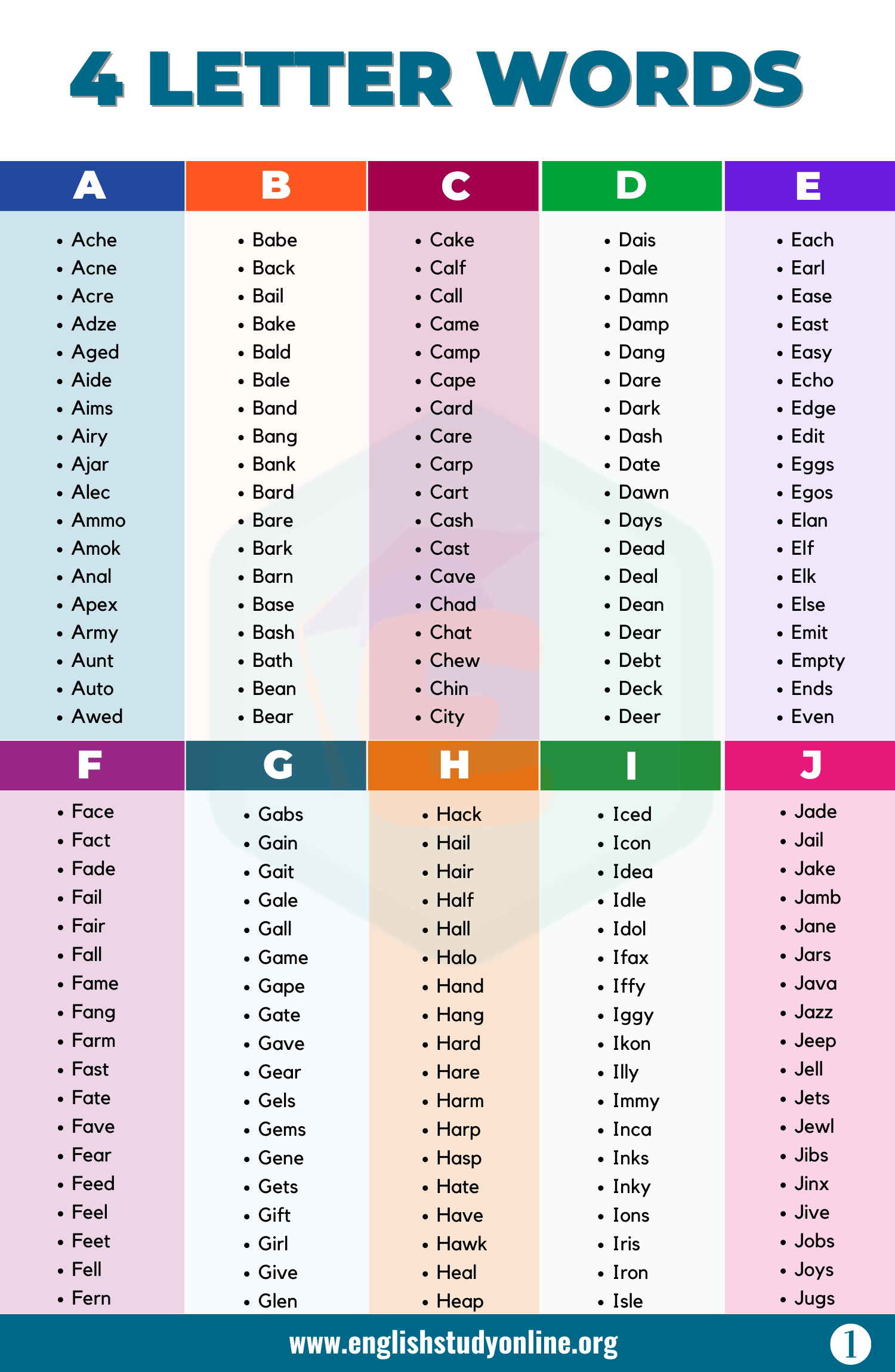

To quickly explore any character in a Unicode string, type in the character, a word, or even an entire paragraph and inspect each code point. With this, one can see that the diaeresis is one of the easiest cases to deal with. The diaeresis, or the two dots, signifies that the underlying "e" is pronounced as /ɛ/ (as the "e" in "bet").

This open e is used in groups of vowels that would otherwise be pronounced differently.
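Exploring the characters in a string can also be done locally. The sketch below lists the code point and Unicode name of each character, then decomposes an e with diaeresis to show that it is a base letter plus a combining mark. `describe` is a hypothetical helper and the sample word is arbitrary.

```python
import unicodedata

def describe(text):
    """Return (character, code point, Unicode name) for every character in text."""
    return [(ch, f"U+{ord(ch):04X}", unicodedata.name(ch, "<unnamed>")) for ch in text]

for row in describe("No\u00ebl"):
    print(*row)

# Canonical decomposition (NFD) splits the letter from its diaeresis.
decomposed = unicodedata.normalize("NFD", "\u00eb")
print([unicodedata.name(ch) for ch in decomposed])
# ['LATIN SMALL LETTER E', 'COMBINING DIAERESIS']
```

Seeing the code points directly makes it easier to tell a genuine accented letter from a mojibake sequence that merely looks similar.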